Radiometry

Infinite Studio enables real-time spectral in-band visualisation with consumer-grade hardware.

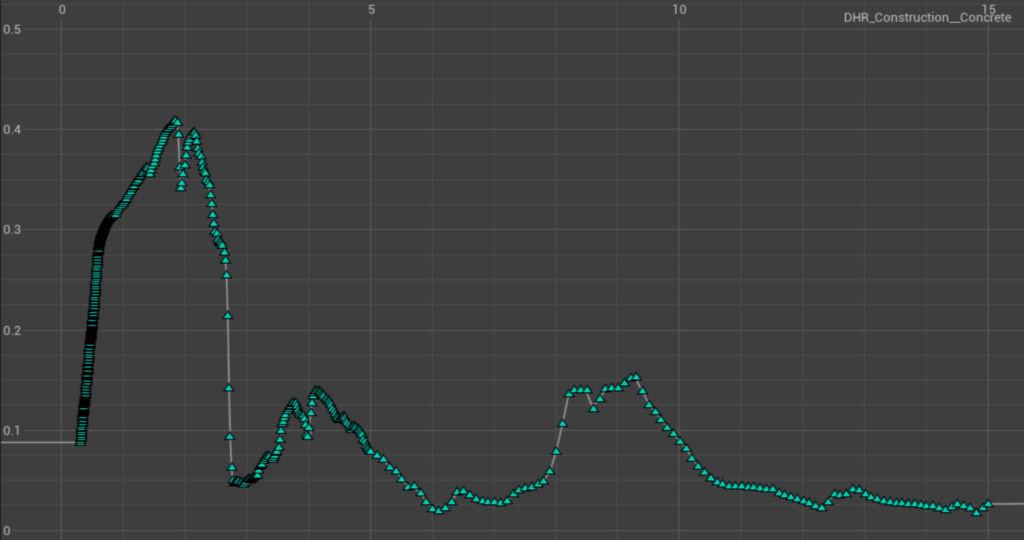

- Materials are defined using real data for spectral reflectivity, emissivity, transmission, and roughness

- A large Spectral Material Library is available with Infinite Studio, drawn from a variety of data sources. These include publicly available databases such as the ECOsystem Spaceborne Thermal Radiometer Experiment on Space Station (ECOSTRESS) Spectral Library, which encompasses the Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER) Spectral Library

- Infinite Studio provides multiple custom nodes that integrate Spectral Materials into the existing UE4 material system

Example curve of Concrete material

Screenshot of example Concrete material applied to asset

- Infinite Studio currently supports MODTRAN for atmospheric calculations

- The following data is computed in-band:

- Transmission and Radiance (Scatter & Thermal) lookup tables for applying to the scene in real-time

- Solar and lunar irradiance for applying sun and moon directional light source intensities

- Ambient sky lighting (global illumination)

- Fully Volumetric Cloud modelling using MODTRAN to obtain transmission and path radiance values across cloud density

- Supports lighting, transmission and secondary effects such as cloud shadows and cloud reflections

- User customisable Weather and Noise Maps for cloud shape

- Adjustable coverage levels, cloud altitude, wind speed, cloud density and many other parameters in editor

Volumetric Cloud Coverage varying from 0-1 in Infinite Studio

- In-band thermal emissions are evaluated by spectral integration

- Grey body and selective radiators are implemented using spectral emissivity data

- Custom spectral emission curves can be imported for non-blackbody emitters
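As a rough illustration of what "evaluated by spectral integration" means, the sketch below integrates Planck's law, weighted by a spectral emissivity curve and an optional sensor response, across a waveband. It is a minimal sketch and not Infinite Studio's implementation: the function names (`planck_radiance`, `tabulated`, `in_band_radiance`), the trapezoidal rule, and the emissivity values are all assumptions made for the example.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
KB = 1.380649e-23    # Boltzmann constant, J/K


def planck_radiance(wavelength_um, temperature_k):
    """Blackbody spectral radiance in W / (m^2 sr um)."""
    lam = wavelength_um * 1e-6                      # micrometres -> metres
    x = H * C / (lam * KB * temperature_k)
    per_metre = 2.0 * H * C**2 / (lam**5 * math.expm1(x))
    return per_metre * 1e-6                         # per metre -> per micrometre


def tabulated(points):
    """Linear interpolation over (wavelength_um, value) pairs (hypothetical helper)."""
    pts = sorted(points)

    def curve(w):
        if w <= pts[0][0]:
            return pts[0][1]
        if w >= pts[-1][0]:
            return pts[-1][1]
        for (w0, v0), (w1, v1) in zip(pts, pts[1:]):
            if w0 <= w <= w1:
                return v0 + (v1 - v0) * (w - w0) / (w1 - w0)

    return curve


def in_band_radiance(temperature_k, band_um, emissivity,
                     response=lambda w: 1.0, steps=2000):
    """Trapezoidal integral of emissivity * response * Planck over the band.

    Returns in-band radiance in W / (m^2 sr).
    """
    lo, hi = band_um
    dw = (hi - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        w = lo + i * dw
        weight = 0.5 if i in (0, steps) else 1.0    # trapezoid end points
        total += weight * emissivity(w) * response(w) * planck_radiance(w, temperature_k)
    return total * dw


if __name__ == "__main__":
    lwir = (8.0, 12.0)
    grey = lambda w: 0.9                            # grey body: constant emissivity
    # Selective radiator: illustrative values only, not taken from any material library.
    selective = tabulated([(8.0, 0.95), (9.0, 0.80), (12.0, 0.92)])
    print(in_band_radiance(300.0, lwir, grey))
    print(in_band_radiance(300.0, lwir, selective))
```

A grey body scales the blackbody result by a constant, while a selective radiator reshapes the spectrum, which is why the emissivity is passed in as a function of wavelength rather than a single number.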

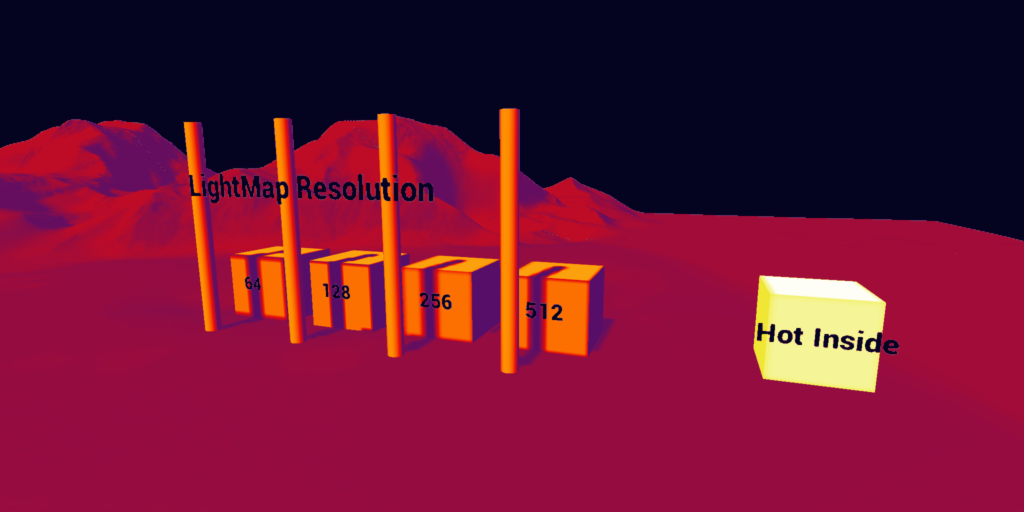

Example of Thermal Modelling Demo landscape

Camera and Sensor Modelling

Camera Objects render multispectral scenes with properties for filmback, lens and sensor response.

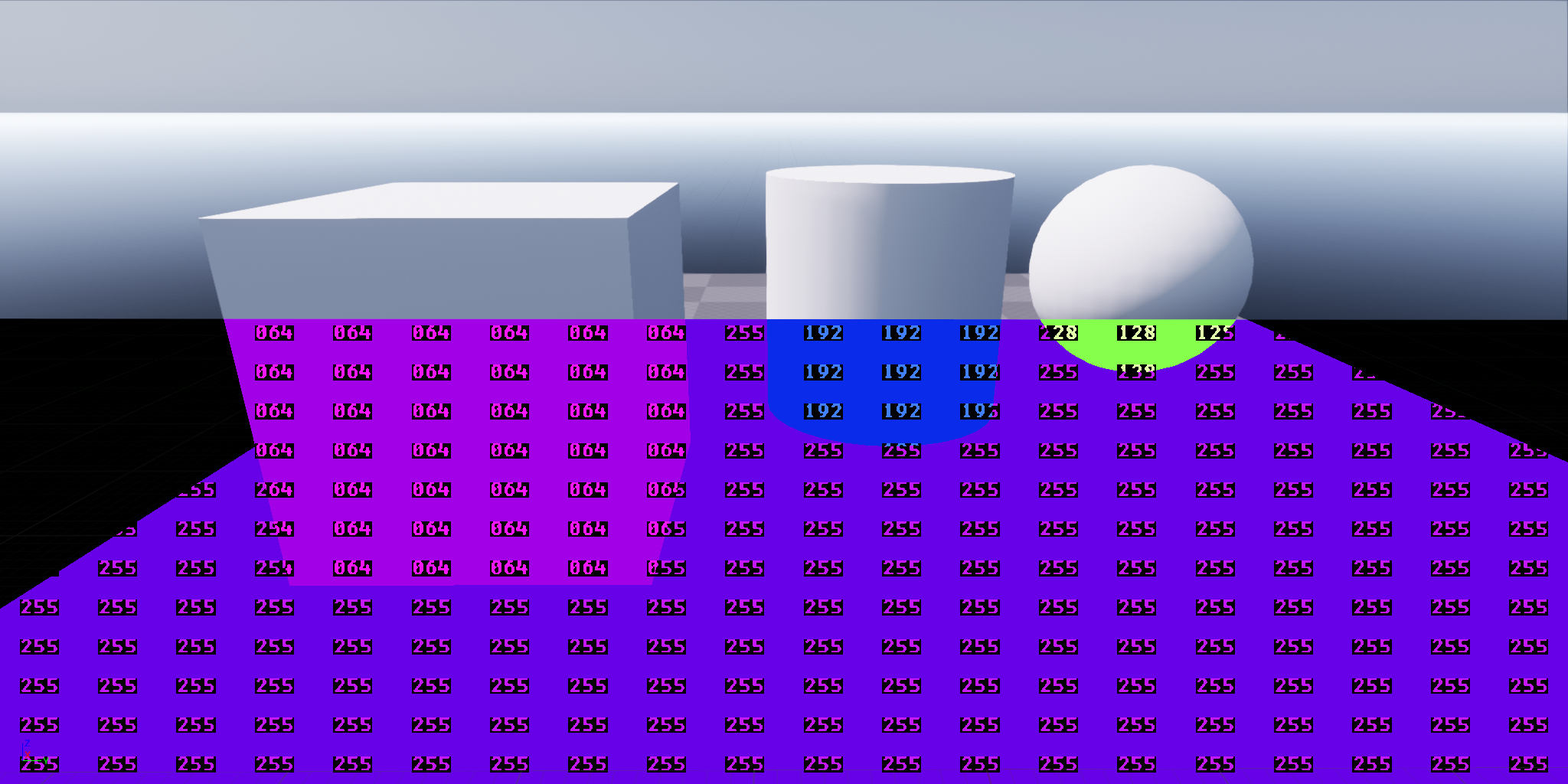

Stages of the In-Band Rendering Equation Solver

- Render Radiance Image: Detector Spectral Response, Resolution

- Radiance to Photons conversion: Pixel Pitch, F-Stop, Shutter Speed, Lens Transmission

- Photons to Electrons conversion: Quantum Efficiency, Full Well Capacity

- Electrons to Counts: ADC Gain & Offset, ADC Bit Depth

At each stage of rendering, applicable noise sources are simulated and can be customised:

- Dark Shot Noise: Shot noise from the dark current, which flows even when no photons are incident on the sensor

- Read Noise: Electronic Signal Noise resulting from sensor design

- Photon Shot Noise: Statistical noise associated with the arrival of photons at the pixel

- Fixed Pattern Noise: Caused by spatial non-uniformities of the pixels

- Aliasing occurs when rasterisation applies too few samples to a target during rendering, resulting in scintillation artefacts

- Long-range targets subtending only a few pixels can vary wildly in intensity as sample points hit or miss the target altogether

- Zoom anti-aliasing renders part of the sensor image (a window) at a much higher resolution and averages it down to the original resolution, giving a more accurate distribution of energy

- The advantage of this approach is that anti-aliasing can be applied adaptively to the parts of the scene that need it, preserving performance everywhere else
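The zoom anti-aliasing idea described above can be shown with a toy renderer. This is a sketch under stated assumptions, not Infinite Studio's renderer: `scene` is a hypothetical function returning radiance at a continuous sensor coordinate, the window is an axis-aligned pixel rectangle, and the down-averaging is a plain box filter.

```python
def render(scene, width, height, window=None, factor=1):
    """Render one centre sample per pixel, then re-render the pixels inside
    `window` = (x0, y0, x1, y1) with factor x factor sub-samples each and
    average them back down to the original resolution."""
    image = [[scene(x + 0.5, y + 0.5) for x in range(width)] for y in range(height)]
    if window is not None:
        x0, y0, x1, y1 = window
        for y in range(y0, y1):
            for x in range(x0, x1):
                total = 0.0
                for j in range(factor):
                    for i in range(factor):
                        total += scene(x + (i + 0.5) / factor,
                                       y + (j + 0.5) / factor)
                image[y][x] = total / (factor * factor)
    return image


def small_target(x, y):
    """A sub-pixel target: 0.3 x 0.3 pixels of radiance 100 on a zero background."""
    return 100.0 if (2.6 <= x < 2.9 and 2.6 <= y < 2.9) else 0.0


if __name__ == "__main__":
    plain = render(small_target, 5, 5)
    zoomed = render(small_target, 5, 5, window=(2, 2, 3, 3), factor=10)
    # The centre sample of pixel (2, 2) misses the target entirely, while the
    # supersampled window recovers its area-weighted share of the energy.
    print(plain[2][2], zoomed[2][2])
```

Only the pixels inside the window pay the factor-squared sampling cost, which is the adaptive behaviour the last bullet describes.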

Multispectral renderings from Infinite Studio of an example asset

L-R: Visible, NIR, MWIR, LWIR

External Control

Infinite Studio connects to your third-party virtual and constructive simulation environment.

- Available in C++, Python, MATLAB and Java, the External Control API supports full control of the simulation via TCP/IP, including commands for:

- Simulation Control (Begin, End, Propagate)

- Querying available assets by path, or type

- Opening and loading levels

- Spawning, moving and destroying actor assets

- Custom message types

- Infinite Studio provides multiple example scripts, demonstrating the most commonly used features

- Trajectory: The Trajectory Spline Actor provides scripted 6-DOF actor motion from time-referenced dynamic state data.

- Recording Tools: Record actor and sensor image data, for single or multiple runs, for post-run analysis in third-party tools.

- Waypoints: An experimental waypoint capability has been added for air, land and maritime platforms, including infantry soldiers. Waypoints can be imported from CSV files, entered manually or added at runtime. Users can specify many waypoint options, including initial speed, speed between points, and end-of-course behaviour.

Video rendering of the Jet Boat following an example Waypoint path

- Target Labelling: Rendering of scenes with target pixel (stencil) labelling for training of neural networks, machine learning or data analytics tasks

- Loop Controller: Orbits a camera about a given target at varying ranges, writing captured image data to file. The loop control can be customised to change additional scene features, such as the environment (atmosphere, time of day, sea state, etc.)

Example of a scene in Standard view combined with the Stencil Label visualisation

Maritime-Based Physics

Modify real-world ocean model surfaces in real-time with wind, fetch length, ocean depth and other inputs.

- Emissions: Based on Planck’s blackbody radiation equation for a specified ocean temperature

- Reflections: Reflectance varies directionally according to the Fresnel equations

- Transmittance: Optional transparent material applies translucent fogging to submerged objects based on depth/view angle

- Fully customisable FFT based wave spectrum model of time-varying ocean height fields

- Wave height and chop driven by real world parameters, e.g.

- Wind speed and direction

- Ocean depth

- Fetch length

- Additional user customisation possible ranging from simple amplitude/property scaling through to complete user generated spectrum

- Buoyant Forces: Surface Platforms use a finite element solution to calculate buoyancy and wave motion. Forces are then consolidated to the object’s centre of buoyancy, and corresponding moments are generated to affect the platform’s motion

- Energy Conservation/Momentum Collisions: Each element approximates the change in energy due to the collision between the object surface and the fluid element

- Limitations: Currently the buoyancy model only imparts forces from the fluid to the object (the high fidelity wakes model generates coupled surface wave motion)

- High Fidelity Wakes: An experimental high-fidelity volume displaced wake implementation

- High Performance Wakes: A high-performance turbulent (foam) and Kelvin wake implementation, allowing hundreds of wakes to be rendered on screen for real-time solutions
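To illustrate the directional reflectance mentioned under Reflections, the sketch below evaluates the Fresnel equations for unpolarised light at a flat air-water interface. It is a simplified example rather than the product's ocean shader: the function name is hypothetical, and the real refractive index of 1.33 is a visible-band assumption (in the infrared the index of water is complex and wavelength dependent).

```python
import math


def fresnel_reflectance(incidence_deg, n_water=1.33, n_air=1.0):
    """Unpolarised power reflectance of a flat air-water interface.

    incidence_deg is measured from the surface normal (0 = looking straight down).
    """
    theta_i = math.radians(incidence_deg)
    cos_i = math.cos(theta_i)
    # Snell's law gives the refracted angle inside the water.
    sin_t = n_air / n_water * math.sin(theta_i)
    cos_t = math.sqrt(max(0.0, 1.0 - sin_t**2))
    # Amplitude coefficients for s- and p-polarised light.
    r_s = (n_air * cos_i - n_water * cos_t) / (n_air * cos_i + n_water * cos_t)
    r_p = (n_air * cos_t - n_water * cos_i) / (n_air * cos_t + n_water * cos_i)
    # Unpolarised light is the average of the two power reflectances.
    return 0.5 * (r_s**2 + r_p**2)


if __name__ == "__main__":
    for angle in (0, 30, 60, 80, 89):
        print(angle, round(fresnel_reflectance(angle), 4))
```

Reflectance stays at a few percent near normal incidence and climbs steeply towards grazing angles, which is why a sea surface looks far more mirror-like near the horizon.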

Captured video from Infinite Studio viewport of an ANZAC surface platform

Additional Content and Features

Infinite Studio can provide bespoke content assets already configured with multispectral materials.

As well as demo maps, Infinite Studio provides you with the toolset to create your own real-world landscapes.

- Street Map Importer: New real-world scenes have been developed, with buildings, roads, railways and vegetation imported from OpenStreetMap and terrain height data from the Registry of Open Data on AWS Global Dataset. You can also create and import your own custom height sampler

- Real World Maps: Urban, rural, forests, grasslands, deserts, mountains, littoral and open ocean scenes can be quickly and accurately generated using Infinite Studio

Infinite Studio can provide the tools to create and edit your own assets, in addition to a library of Unrestricted Assets.

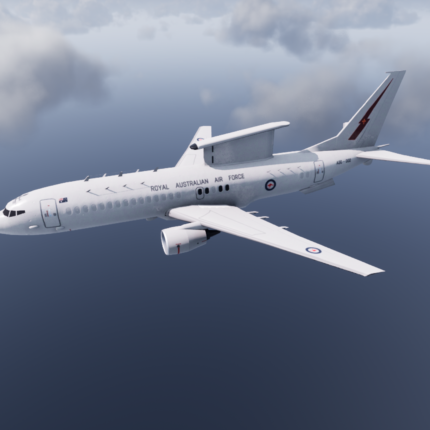

- 3D model library of aircraft, ships, vehicles, weapons, people, infrastructure & nature assets pre-configured with multispectral materials

- See our Blog Posts for content updates

- Multi-player networked scenario management for human-in-the-loop real-time simulations